Warning: This article contains references to, and screenshots of, offensive language.

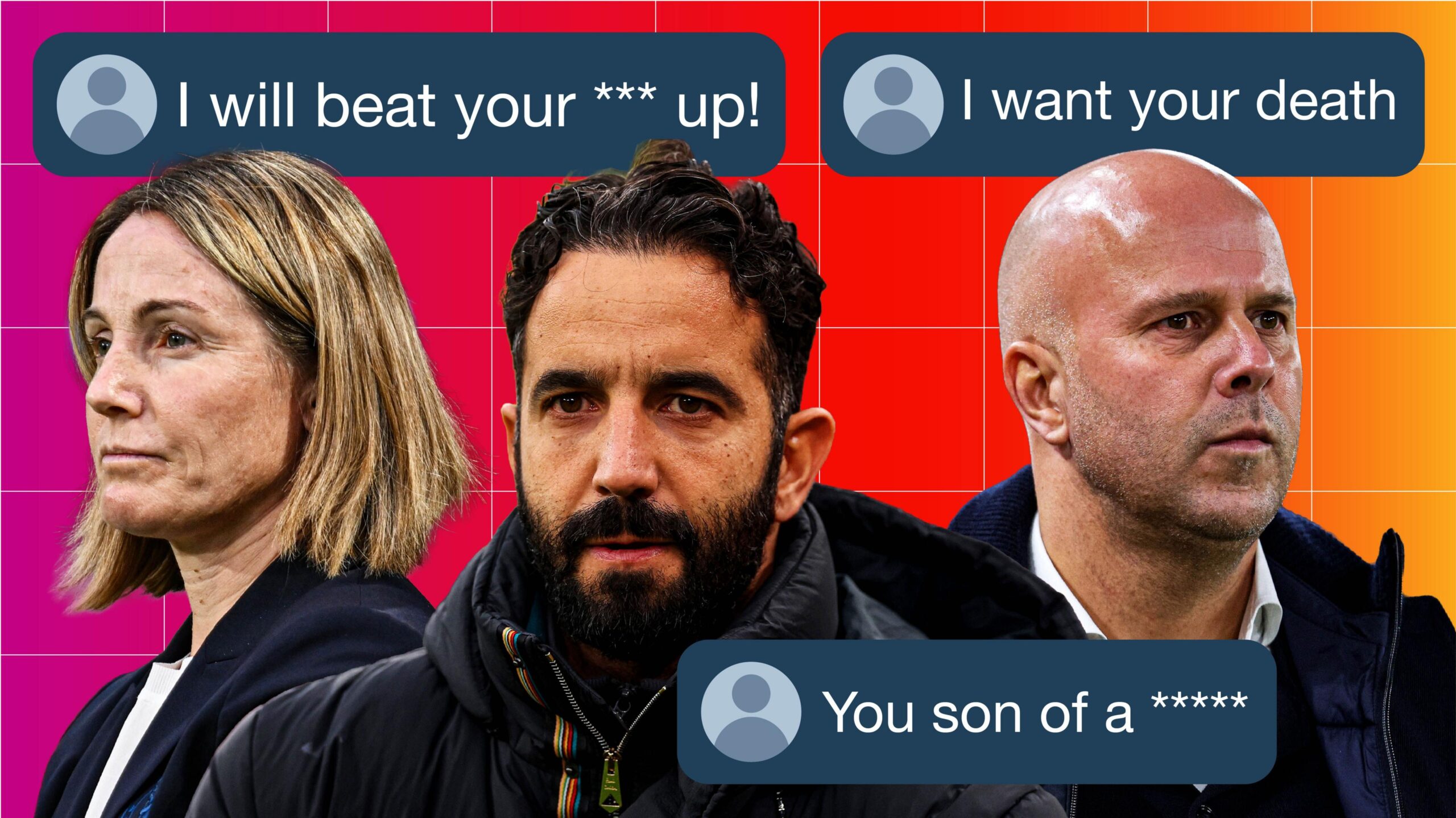

A BBC investigation has revealed that more than 2,000 abusive social media posts were sent to Premier League and Women’s Super League managers in one weekend, including death threats and rape threats.

The analysis, carried out with data science firm Signify, covered posts made during 10 Premier League and six WSL games on the weekend of November 8 and 9.

Managers received more abuse than players, and 82% of the abusive posts were on X, formerly Twitter.

The most targeted managers in the men’s top flight were Ruben Amorim, Arne Slot, and Eddie Howe, while Chelsea and their manager, Sonia Bompastor, received 50% of all abuse in the WSL.

People feel like they are being treated unfairly online.

According to Liverpool head coach Slot, “Abuse is never a good thing, whether it’s about me or other managers.”

“We anticipate being criticized. That is entirely normal. I don’t see it because I don’t have social media, but I do know it exists.”

Threat Matrix, an artificial intelligence tool used by Signify, searches social media posts for abuse.

It analyzed more than 500,000 posts on X, Instagram, Facebook, and TikTok over the weekend of matches chosen for the study, and it found 22,389 potentially abusive messages.

However, those flags occasionally contain examples that aren’t abusive. For instance, references to Newcastle defender Dan Burn can be flagged as a threat due to his surname.
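
The article does not describe how Threat Matrix works internally, so the following is only a simplified sketch of why plain word matching would produce this kind of false positive. The watch list and the `flag_post` function are hypothetical and are not Signify's rules.

```python
import re

# Hypothetical watch list for illustration only; not Signify's real rules.
THREAT_TERMS = {"burn", "kill", "stab"}


def flag_post(text: str) -> bool:
    """Return True if any watch-list term appears as a whole word."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(word in THREAT_TERMS for word in words)


posts = [
    "Dan Burn was immense at the back today",
    "Great result for Newcastle",
]

# One flag per post, in the same order as the posts list.
flags = [flag_post(post) for post in posts]
```

Here the first post is flagged even though it is harmless praise for a player, which is why flagged posts cannot be treated as abuse without further review.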

Thirty-nine posts, including rape threats and monkey emojis on black players’ accounts, were deemed to be serious enough to warrant further investigation, which may involve reporting to football clubs for potential fan identification and referral to law enforcement.

One post was reported to police, who decided it did not meet the threshold for further action.

Meta, which owns and runs Facebook and Instagram, removed only one of the posts flagged to it. The others remain under investigation.

Some of the posts identified on X were removed, while others were left online with their reach restricted.

According to Professional Footballers’ Association CEO Maheta Molango, “If this happened on the street, it would have criminal consequences and potentially financial consequences.

“Why exactly do people feel like they are protected online? This needs to end; we must put an end to it.”

Some in the game accept that abuse is inevitable.

Newcastle manager Howe said, “It comes with the territory now.”

“My advice is to always try to keep your mind afloat and develop psychological resilience so you don’t have to read it. However, people will always show it to you, even if you don’t want them to.”

Meta has implemented tools to help block and filter abuse, while X has introduced a tool that displays the country or continent in which an account is based.

“Really awful, violent content”

The small Signify team quietly combs through thousands of posts in a central London office.

The number of abusive messages rises with each new game.

Although prompted solely by events on a football pitch, numerous posts come in containing racist slurs, threats of rape against managers’ families, and even death threats.

Messages are treated as verified abuse only after the AI system flags them and human reviewers confirm, twice, that they violate the social media platforms’ own internal rules.

The biggest spike in abusive posts came on November 8 during Tottenham’s dramatic 2-2 draw with Manchester United, a game that featured two stoppage-time goals and left both managers and several players subject to intense abuse.

Messages seen by the BBC included death threats against Amorim, one of which read: “Kill Amorim, someone get that dirty Portuguese.”

Social media platforms are subject to a statutory duty of care under the Online Safety Act, which became law in October 2023.

That means they are legally required to tackle illegal content such as threats, harassment, and hate speech. Ofcom is now the independent regulator that oversees platforms’ compliance.

However, the social media platforms say they are reluctant to censor or remove content because of their commitment to free speech.

Signify asserts that the issue of online threats and serious abuse is getting worse.

According to its CEO, Jonathan Hirshler, “We’ve seen around a 25% increase in the levels of abuse we’re detecting.”

“We understand the platforms’ position on free speech, but some of the things we’re addressing are so egregious.”

Homophobia and threats in WSL

The vast majority of the 97 verified abusive messages posted about WSL matches were prompted by controversy during Chelsea’s 1-1 draw at Arsenal on November 8.

Bompastor, Chelsea’s boss, was threatened with violence, and a homophobic slur was used in more than half of the messages.

“People believe they can say anything from behind a screen,” Bompastor said. “I want to speak out against that because it’s frightening.

“My family includes children, too. They object to those online comments. Because they are so young, people need to understand how it might affect them.

“Threats are a big problem, because the security in the women’s game differs from that in the men’s game.

“Abuse can actually lead to mental health issues for players. It has a lot of potential to do harm.”

Bompastor pointed to Jess Carter, the England defender and former Chelsea player who was the target of significant racist abuse during Euro 2025, and believes the platforms are at the center of the problem.

According to Bompastor, “the social media companies are not carrying out their duties or taking accountability.”

“I believe we will be in this situation for too long if we have to wait for them to act.”

How does abuse prevention work in clubs?

More clubs are taking matters into their own hands as anger toward social media companies grows.

In the three years that Arsenal has worked with Signify, abuse of the club’s own players, coaches, and owners by affiliated fans has fallen by 90%.

Signify attributes this to Arsenal’s ongoing efforts, including introducing educational programs and banning supporters identified as sending abuse from the Emirates.

Chelsea Women are now working with the same company.

Meanwhile, Tottenham is looking into allegations that visitors to their home ground posted abusive content.

Premier League content protection director Tim Cooper stated, “We’re constantly monitoring around games where abuse can occur and we’re looking for triggers like goal-scoring, missed penalties, or even things like yellow and red cards.”

“The platforms can alter their algorithms to do more,” he added. “That would be a positive step.”

Source: BBC
